Introduction

Before building a full stair detection system for my exoskeleton research, I ran a series of quick experiments to test how well depth cameras perceive the world. This blog post is a summary of those experiments — what worked, what didn’t, and what I learned about combining vision, depth, and real-world geometry in robotics.

As robotics and computer vision advance, the ability of machines to perceive and understand the world in three dimensions is becoming increasingly important. I set out to answer a simple question: how do robots "see" the world? In this post, I'll share my early experiments with YOLO-based object detection, depth-based distance estimation, background removal, and measuring a person's height with a 3D camera.

Building a Stair Detection Model with YOLOv8

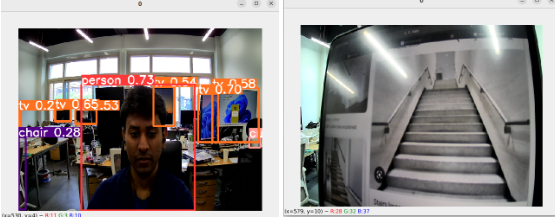

YOLOv8 Stair Detection Example

My first experiment focused on a classic computer vision challenge: object detection. Specifically, I wanted to see if I could train a model to detect stairs—a critical feature for any mobile robot navigating real-world environments.

- Data Collection: I started by downloading around 50 images of stairs from Google. While this is a small dataset by deep learning standards, it was enough for a proof-of-concept.

- Annotation & Preprocessing: Each image was manually annotated to mark the location of stairs. I used standard tools to create bounding boxes and prepare the data for training.

- Model Training: I chose YOLOv8 as my base model for its speed and accuracy. Training ran on a CPU and, even on that limited hardware, took only about 30 minutes.

- Results: Despite the small dataset, the model performed surprisingly well in detecting stairs in new images, including those captured from my webcam. This suggests that with more data, the model could become robust enough for real-world deployment.

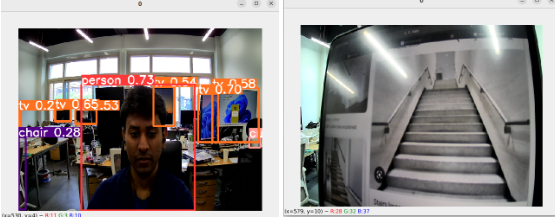

YOLOv8 Object Detection on Webcam

Lesson: Even with a modest dataset and basic hardware, modern object detection models like YOLOv8 can deliver impressive results. The key is careful annotation and preprocessing.
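
To make the annotation step concrete, here is a minimal sketch of the label format that YOLO-style training expects: one text line per box, holding a class index followed by the box center, width, and height, all normalized to the image size. The function name `to_yolo_label` and the example box are hypothetical, and annotation tools usually export this format directly, so this only illustrates what ends up in the label files.

```python
def to_yolo_label(class_id, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # YOLO labels store the box center and size as fractions of the image size.
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical example: a single "stairs" class (index 0) in a 640x480 image.
label = to_yolo_label(0, (160, 120, 480, 360), 640, 480)
print(label)  # 0 0.500000 0.500000 0.500000 0.500000
```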

Object Detection and Distance Estimation with a Depth Camera

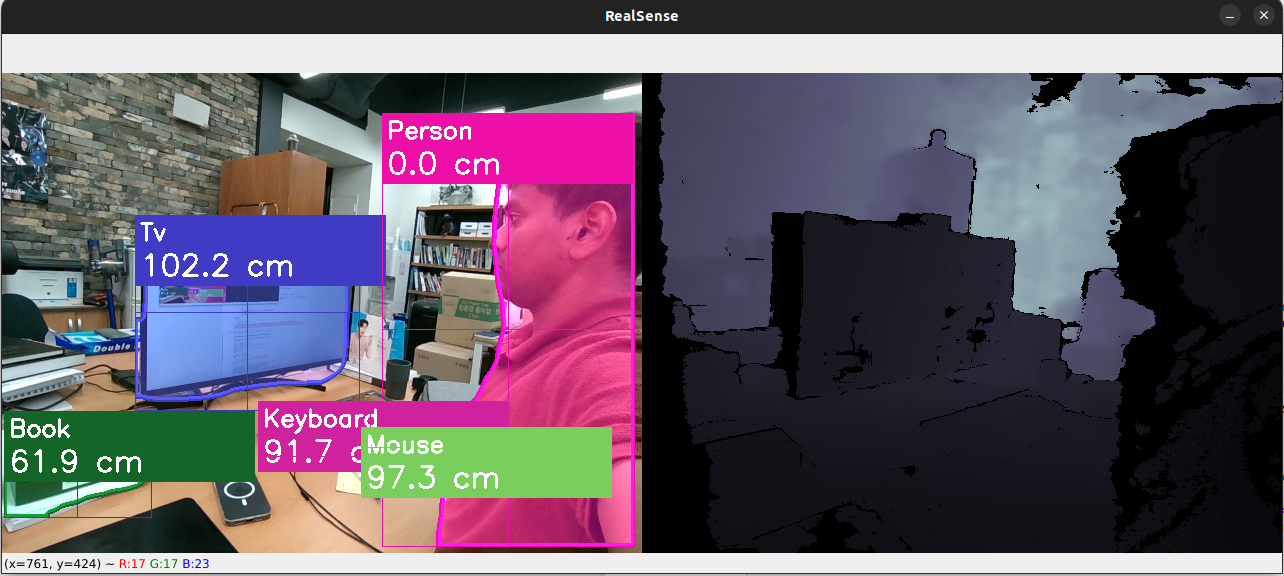

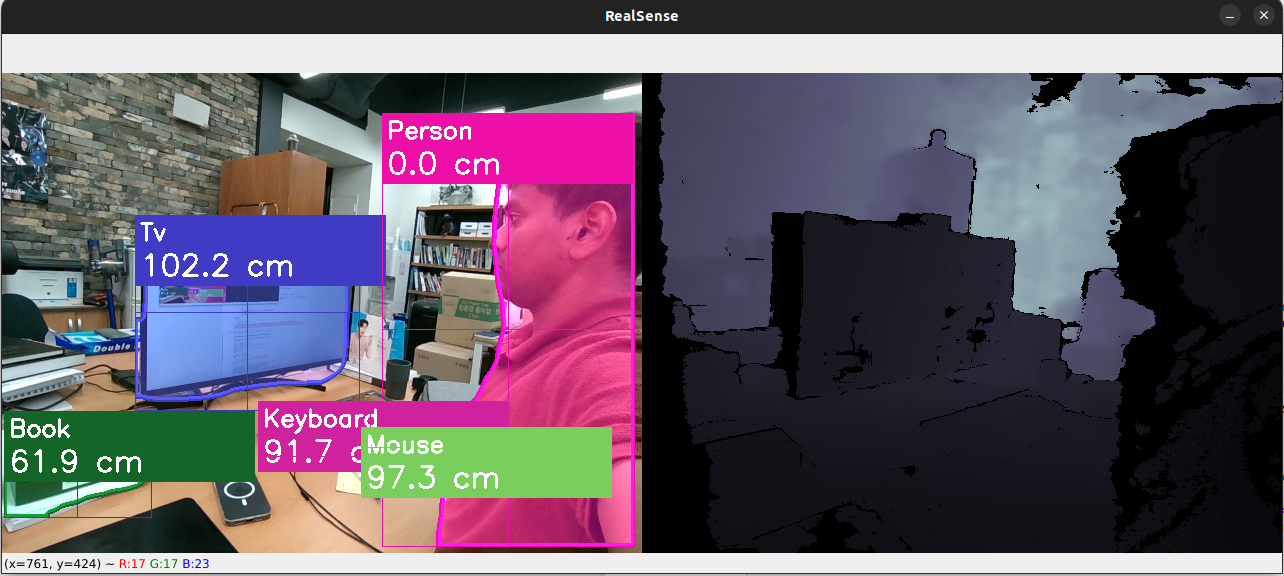

Object Detection and Distance Estimation with Depth Camera

After achieving promising results with 2D images, I wanted to add a new dimension—literally. Enter the Intel RealSense D455, a 3D depth camera capable of capturing both color and depth information.

- Object Detection in 3D: By combining the YOLOv8 model with the depth data from the camera, I was able to not only detect objects (like stairs) but also estimate their distance from the camera. This is crucial for applications like autonomous navigation, where knowing how far away an obstacle is can make all the difference.

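- Implementation: The process involved mapping the 2D bounding boxes from YOLO onto the depth map provided by the camera. By averaging the depth values within each bounding box, I could estimate the real-world distance to each detected object. Because a bounding box also covers some background and some pixels with no depth reading, this average is an approximation of the distance to the object itself.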
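
The averaging step described above can be sketched as follows. This is a dependency-free illustration, not the code used in the experiment: it assumes the depth map has already been converted to meters, is aligned with the color image the boxes came from, and uses 0 to mark pixels with no reading. The name `mean_depth_in_box` and the tiny 4x4 depth map are made up for the example; with real frames one would typically do the same thing on a NumPy array.

```python
def mean_depth_in_box(depth_m, box):
    """Average the valid depth readings inside a bounding box.

    depth_m: 2D list of depths in meters (rows of pixels); 0 means "no reading".
    box: (x_min, y_min, x_max, y_max) in pixels, with the max edges exclusive.
    Returns None if the box contains no valid depth pixels.
    """
    rows = len(depth_m)
    cols = len(depth_m[0]) if rows else 0
    x_min, y_min, x_max, y_max = box
    # Clamp the box to the image so detections touching the border stay valid.
    x_min, x_max = max(0, x_min), min(cols, x_max)
    y_min, y_max = max(0, y_min), min(rows, y_max)
    valid = [
        d
        for row in depth_m[y_min:y_max]
        for d in row[x_min:x_max]
        if d > 0  # skip pixels where the camera returned no depth
    ]
    return sum(valid) / len(valid) if valid else None


# Made-up depth map: an object about 2 m away in front of a wall at 3 m.
depth_m = [
    [3.0, 3.0, 3.0, 3.0],
    [3.0, 2.0, 0.0, 3.0],
    [3.0, 2.0, 2.0, 3.0],
    [3.0, 3.0, 3.0, 3.0],
]
distance = mean_depth_in_box(depth_m, (1, 1, 3, 3))
print(distance)  # 2.0
```

If background pixels inside the box pull the average away from the object, taking the median of the valid readings instead of the mean is a common alternative.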

Lesson: Depth cameras unlock a new level of perception for robots, enabling them to understand not just what is in their environment, but where it is in 3D space.

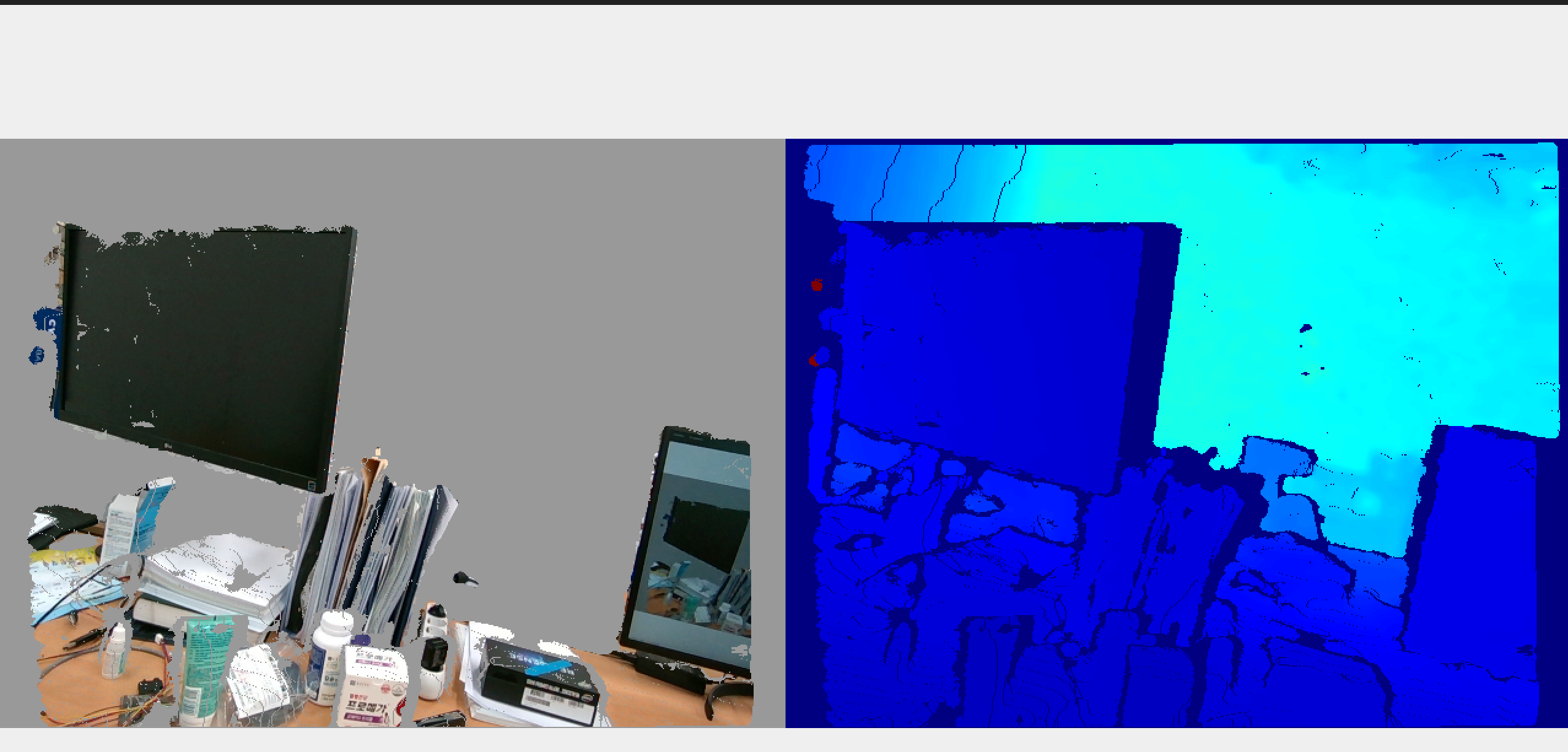

Background Removal Using 3D Data

Background Removal Using Depth Camera

Another fascinating application of depth cameras is background removal. Traditional background subtraction techniques often struggle with complex scenes, but depth data makes the task much easier.

- Approach: By thresholding the depth map, that is, keeping only the pixels closer than a chosen distance, I could easily separate foreground objects (like people or stairs) from the background. This is especially useful in robotics, where isolating objects of interest is often a prerequisite for further analysis or manipulation.

- Results: The background removal was fast and robust, even in cluttered environments.
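
A minimal sketch of depth thresholding, under a few assumptions: the color and depth images are already aligned pixel for pixel, depth is in meters, and 0 means the camera returned no reading. The function name `remove_background`, the 1.5 m cutoff, and the 2x2 test images are all hypothetical and chosen only to keep the example small.

```python
def remove_background(color, depth_m, max_distance_m, fill=(0, 0, 0)):
    """Keep pixels whose depth is valid and within max_distance_m.

    color: 2D list of (r, g, b) tuples.
    depth_m: 2D list of depths in meters, same shape as color; 0 means "no reading".
    Every other pixel is replaced with the fill color.
    """
    return [
        [
            pixel if 0 < d <= max_distance_m else fill
            for pixel, d in zip(color_row, depth_row)
        ]
        for color_row, depth_row in zip(color, depth_m)
    ]


# Made-up 2x2 images: two near pixels, one far pixel, one missing reading.
color = [
    [(200, 10, 10), (10, 200, 10)],
    [(10, 10, 200), (90, 90, 90)],
]
depth_m = [
    [0.8, 2.5],
    [0.0, 1.0],
]
foreground = remove_background(color, depth_m, max_distance_m=1.5)
print(foreground)
# [[(200, 10, 10), (0, 0, 0)], [(0, 0, 0), (90, 90, 90)]]
```

Treating missing readings as background is a design choice here; it avoids keeping pixels whose distance is unknown, at the cost of occasional holes in the foreground.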

Lesson: Depth-based background removal is a powerful tool for simplifying scenes and focusing on what matters most.

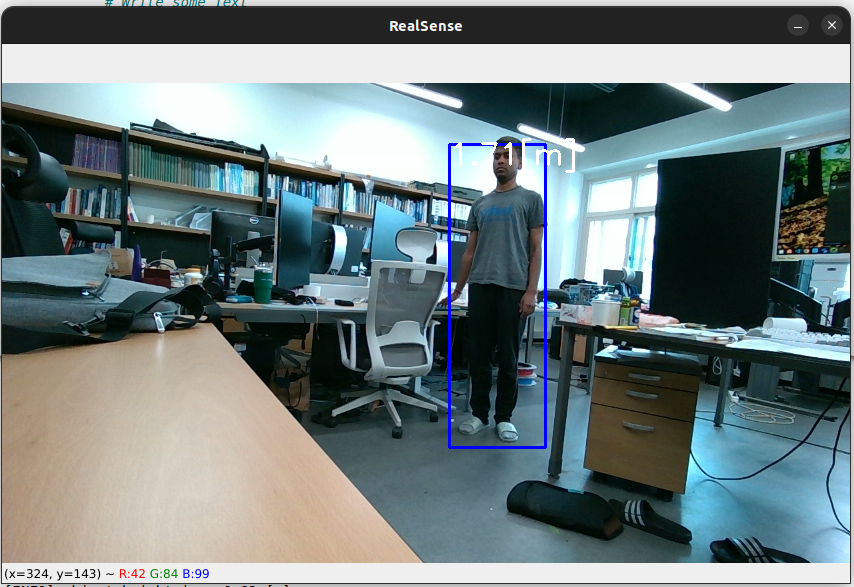

Measuring Person Height with Real-World Coordinates

Person Height Detection in Real Coordinates

For my final experiment, I used the RealSense D455 to measure a person's height in real-world units.

- Method: By detecting the top of the head and the bottom of the feet in the depth image, I could calculate the vertical distance between these points. The camera's intrinsic parameters and depth data made it possible to convert pixel measurements into real-world coordinates.
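
The pixel-to-world conversion can be sketched with the standard pinhole camera model, where a pixel `(u, v)` at depth `Z` maps to `X = (u - cx) * Z / fx` and `Y = (v - cy) * Z / fy`. The sketch below ignores lens distortion, and the intrinsic values, pixel positions, and function names are invented for illustration rather than taken from the D455 or from the experiment. It measures height as the straight-line 3D distance between the head and foot points, which is one reasonable reading of "vertical distance" when the person stands upright.

```python
import math


def deproject(pixel, depth_m, fx, fy, cx, cy):
    """Map a pixel and its depth to a 3D point in the camera frame (pinhole model)."""
    u, v = pixel
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def height_between(head_pixel, head_depth_m, foot_pixel, foot_depth_m, intrinsics):
    """Return the 3D distance in meters between the head and foot points."""
    head = deproject(head_pixel, head_depth_m, **intrinsics)
    foot = deproject(foot_pixel, foot_depth_m, **intrinsics)
    return math.dist(head, foot)


# Hypothetical intrinsics (focal lengths and principal point, in pixels).
intrinsics = {"fx": 600.0, "fy": 600.0, "cx": 320.0, "cy": 240.0}

# Hypothetical detection: head at row 40, feet at row 400, both 3 m away.
height_m = height_between((320, 40), 3.0, (320, 400), 3.0, intrinsics)
print(f"{height_m:.2f} m")  # 1.80 m
```

In practice the intrinsics would be read from the camera rather than typed in, and any error in the head or foot depth reading carries straight through to the height estimate.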

Lesson: The results were surprisingly accurate, at the centimeter level. Depth cameras can provide precise real-world measurements, opening up new possibilities for human-robot interaction and biometric analysis.

Conclusion

These early experiments have given me a glimpse into the world of 3D perception—a world where robots can not only see, but also understand their environment in ways that were once the exclusive domain of science fiction. From detecting stairs with a handful of images to measuring human height with centimeter-level accuracy, the combination of deep learning and depth sensing is truly transformative.

Next Steps: I plan to expand my dataset, experiment with more advanced models, and explore additional applications like gesture recognition and scene reconstruction. The journey has just begun, but the lessons learned so far are clear: with the right tools and a bit of curiosity, anyone can start to see the world like a robot.

This work was conducted at KAIST's ExoLab under the guidance of Prof. Kong.