The Model Context Protocol: Transforming AI Agents from Isolated Systems to Connected Ecosystems

Introduction

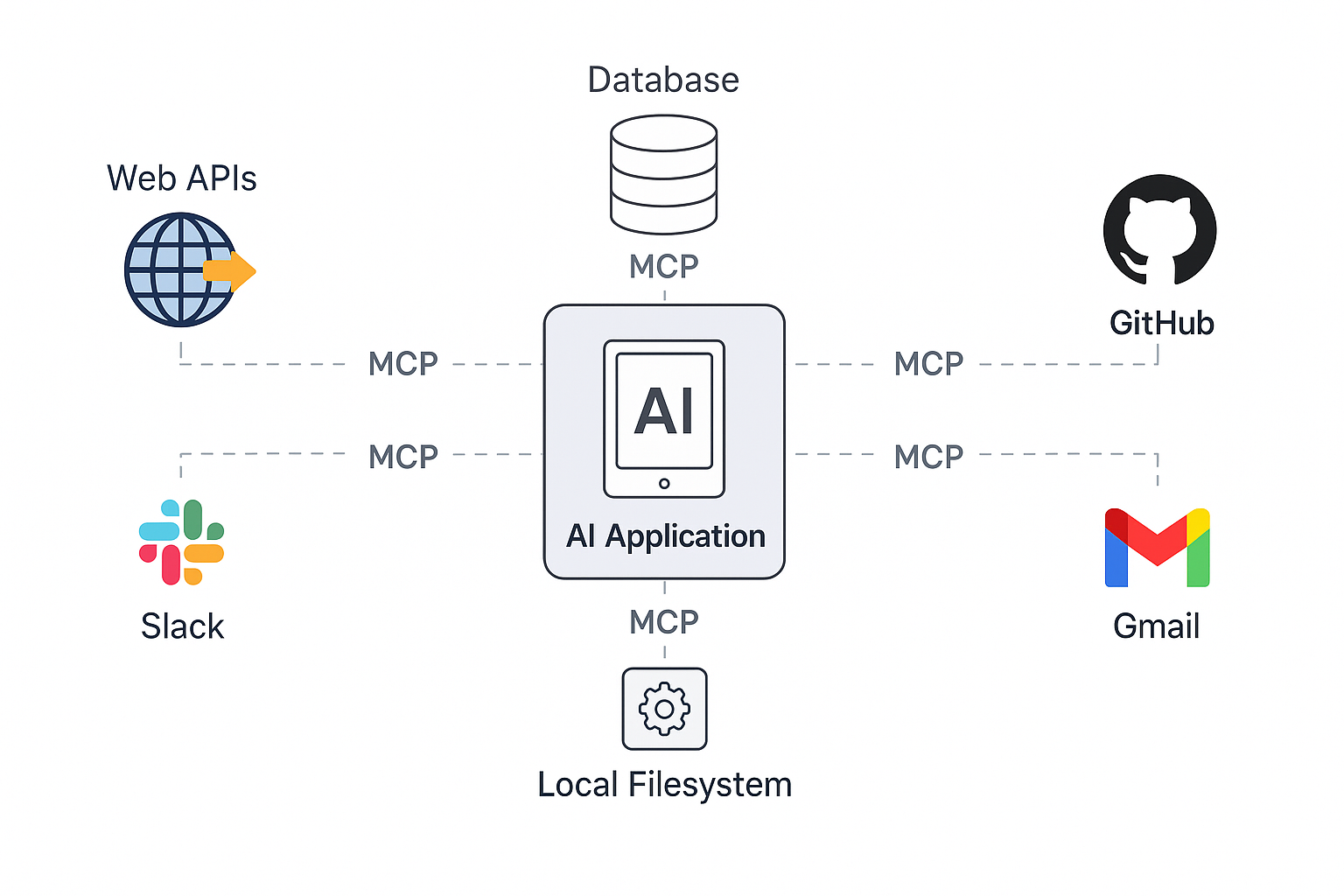

The Model Context Protocol (MCP) represents a fundamental shift in how AI agents interact with external systems and data sources. Introduced by Anthropic as an open standard in November 2024, MCP addresses a critical limitation of large language models (LLMs): confined to their own context windows, they are powerful but isolated. MCP turns them into dynamic, connected agents capable of real-world interaction.

This post explores the technical foundations, architectural principles, and potential of MCP as an infrastructure layer for the next generation of autonomous AI systems.

My insights into MCP come from attending the MCP Track at the recent AI Engineers conference, where industry leaders, researchers, and engineers gathered to discuss, debate, and shape this emerging standard, combined with my own hands-on experience building MCP servers. The sections below lay out MCP's foundational concepts and explain why it is poised to significantly expand the capabilities of next-generation AI agents.

Genesis of MCP — Why Do We Need It?

Initially, many were skeptical of MCP's necessity. A common refrain was: "Why another protocol? Don't LLMs already support tool-calling?" However, upon closer inspection, these existing solutions reveal fundamental limitations.

Traditional function calling operates as a point-to-point solution where individual AI models invoke specific tools through custom implementations. This approach requires developers to build unique connectors for each data source or tool, resulting in fragmented, non-standardized integrations that cannot scale effectively across complex, multi-agent workflows.

In contrast, MCP was conceived from the ground up to solve these very issues by creating a dedicated protocol layer. MCP enables the standardized, composable connection of tools and agents into LLM workflows. Unlike simple function calling, which focuses on the moment a tool is used, MCP creates an entire ecosystem infrastructure that enables tools to be discovered, hosted, and reused across different applications and contexts.
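
To make the difference between "calling a tool" and "discovering then calling a tool" concrete, here is a minimal sketch of the two JSON-RPC 2.0 messages involved: `tools/list` for discovery and `tools/call` for invocation. The method names come from the MCP specification; the helper functions, the in-memory `FAKE_SERVER_TOOLS` catalog, and the `handle` dispatcher are illustrative stand-ins I made up for this post, not a real transport or SDK.

```python
import json
from typing import Any, Dict, List

# Hypothetical in-memory catalog standing in for a real MCP server.
FAKE_SERVER_TOOLS: List[Dict[str, Any]] = [
    {
        "name": "analyze_text",
        "description": "Analyze text content and extract key information",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    }
]


def list_tools_request(request_id: int) -> Dict[str, Any]:
    """Build the discovery request a client sends first."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def call_tool_request(request_id: int, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build the invocation request for one discovered tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Toy dispatcher: answers discovery and echoes calls (illustration only)."""
    if request["method"] == "tools/list":
        result: Dict[str, Any] = {"tools": FAKE_SERVER_TOOLS}
    elif request["method"] == "tools/call":
        params = request["params"]
        text = f"{params['name']} received {len(params['arguments'])} argument(s)"
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # Standard JSON-RPC "method not found" error code.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32601, "message": "Method not found"},
        }
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


if __name__ == "__main__":
    discovered = handle(list_tools_request(1))["result"]["tools"]
    first_tool = discovered[0]["name"]
    reply = handle(call_tool_request(2, first_tool, {"text": "hello"}))
    print(json.dumps(reply, indent=2))
```

The point of the sketch is that the client never hard-codes `analyze_text`: it learns the name and schema from the discovery response, which is what makes the same server reusable across applications.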

The vision behind MCP is ambitious yet clear: establish a universal, extensible framework that empowers AI agents to break free from their context-box constraints, turning them into sophisticated, dynamically interactive, and contextually intelligent actors in broader digital ecosystems.

Core Concepts of MCP

The architecture and functionality of MCP are grounded in several key concepts, each crucial for enabling robust, agentic interactions between models, servers, and tools. Here, we dive deeper into each of these concepts, clearly outlining their technical significance.

Servers as Clients

A fundamental paradigm shift introduced by MCP is the idea that servers themselves can act as clients to Large Language Models (LLMs). Traditionally, the server is seen as a passive receiver of requests from clients. However, MCP lets a server initiate requests of its own, routed through the connected client to the model, which effectively flips the traditional client-server relationship.

Why is this significant?

- It enables complex, asynchronous workflows

- Servers can actively fetch context, make decisions dynamically, or trigger additional tools mid-execution

- It allows multi-stage and recursive interactions among models and servers

This concept can be pictured as a hierarchy in which each node is an MCP entity capable of both receiving and initiating calls, which highlights the protocol's flexibility.

Sampling

One of the most innovative—but currently least supported—primitives of MCP is sampling, where MCP servers request LLM completions via clients. This is a powerful inversion of traditional interactions, enabling servers to leverage LLM intelligence directly through structured requests.

Technical Workflow of Sampling:

- MCP Server → MCP Client: Server issues a sampling request

- MCP Client → LLM: Client forwards this request as a completion call to the LLM

- LLM → MCP Client: LLM processes and returns a completion response

- MCP Client → MCP Server: Client passes the completion response back to the server
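
The four steps above can be sketched in a few lines of plain Python. The method name `sampling/createMessage` and the message and result field names follow the MCP specification as I understand it; `stub_llm`, `client_handle_sampling`, and the `stub-model` name are hypothetical placeholders, and a real client would call an actual model and typically let the user approve the request first.

```python
from typing import Any, Callable, Dict

# Any function that turns a prompt into a completion string.
LLM = Callable[[str], str]


def build_sampling_request(request_id: int, prompt: str, max_tokens: int = 200) -> Dict[str, Any]:
    """Step 1: the server asks the client for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [{"role": "user", "content": {"type": "text", "text": prompt}}],
            "maxTokens": max_tokens,
        },
    }


def client_handle_sampling(request: Dict[str, Any], llm: LLM, model_name: str) -> Dict[str, Any]:
    """Steps 2-4: the client forwards the prompt to its LLM and wraps the reply."""
    prompt = request["params"]["messages"][-1]["content"]["text"]
    completion = llm(prompt)  # a real client could ask the user to approve this first
    return {
        "jsonrpc": "2.0",
        "id": request["id"],  # same id, so the server can match the response
        "result": {
            "role": "assistant",
            "content": {"type": "text", "text": completion},
            "model": model_name,
            "stopReason": "endTurn",
        },
    }


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"[summary of {len(prompt.split())} words]"


if __name__ == "__main__":
    request = build_sampling_request(7, "Summarize: MCP lets servers request completions.")
    response = client_handle_sampling(request, stub_llm, model_name="stub-model")
    print(response["result"]["content"]["text"])
```

Note that the server never holds an API key or talks to the model directly; the client stays in control of which model is used and whether the request runs at all.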

Practical Examples of Sampling include:

- Summarizing external resources dynamically as part of workflows

- Categorizing or extracting structured data from unstructured inputs

- Agentic Server Tools that make decisions based on generated completions

The ability for servers to explicitly call LLMs provides enormous flexibility, enabling deeply dynamic and intelligent workflows not feasible with traditional tool-calling mechanisms.

Elicitation

Elicitation, a currently evolving MCP primitive, empowers tools to directly query users for additional context during their execution. In current workflows, tools only interact with the LLM. MCP extends this capability to allow direct tool-user interaction.

Why elicitation matters:

- Enables iterative refinement of requests

- Enhances alignment by seeking explicit user consent or clarification

- Allows for richer user experiences by integrating human-in-the-loop processes directly within agent workflows

Though still in development, elicitation illustrates MCP's commitment to creating adaptive, user-centric agent systems.
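
Because the primitive is still being drafted, the following is only a conceptual sketch of the control flow, not the wire format. Everything in it is hypothetical: the `UserReply` shape, the `AskUser` callback type, and the `book_meeting` tool are names I invented to show a tool pausing mid-execution to ask the user for a missing detail.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class UserReply:
    """Hypothetical reply shape: the user either accepts (with data) or declines."""

    action: str  # "accept" or "decline"
    content: Optional[Dict[str, str]] = None


# Hypothetical callback type: takes a question, returns the user's reply.
AskUser = Callable[[str], UserReply]


def book_meeting(topic: str, date: Optional[str], ask_user: AskUser) -> str:
    """Tool that pauses to ask the user when a required detail is missing."""
    if date is None:
        reply = ask_user(f"Which date should I book '{topic}' for?")
        if reply.action != "accept" or not reply.content or "date" not in reply.content:
            # The user declined or gave nothing usable: stop instead of guessing.
            return "Booking cancelled: no date provided."
        date = reply.content["date"]
    return f"Booked '{topic}' on {date}."


if __name__ == "__main__":
    def scripted_user(question: str) -> UserReply:
        print(f"tool asks: {question}")
        return UserReply(action="accept", content={"date": "2025-07-01"})

    print(book_meeting("MCP design review", None, scripted_user))
```

The design choice worth noticing is that a declined request ends the tool call cleanly rather than letting the model invent the missing value.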

Multi-Agent Coordination

MCP significantly simplifies the orchestration of multi-agent systems, enabling structured communication among multiple autonomous agents or tools working in coordination.

Key elements of MCP multi-agent orchestration include:

- Dynamic Tools: Tools can enter and exit the workflow dynamically based on evolving server state or conditions

- Live Logging: Tools can stream intermediate execution states back to the client, ensuring transparency and observability during complex processes

- Tracing and Observability: Detailed logging and tracing standards allow auditing complex workflows—essential for debugging and regulatory compliance

Each agent/node in MCP systems seamlessly coordinates actions through standardized interactions, ensuring scalability and robustness.
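
As a rough illustration of dynamic tools and live logging, the sketch below keeps a mutable tool set and emits a notification whenever it changes, plus log notifications for intermediate progress. The notification method names `notifications/tools/list_changed` and `notifications/message` are taken from the MCP specification; the `DynamicToolSet` class and its `send` callback are my own simplification, and a real server would push these messages over its transport.

```python
from typing import Any, Callable, Dict, List

Notification = Dict[str, Any]
Send = Callable[[Notification], None]


class DynamicToolSet:
    """Tracks available tools and tells the client whenever the set changes."""

    def __init__(self, send: Send) -> None:
        self._tools: Dict[str, str] = {}  # tool name -> description
        self._send = send

    def add(self, name: str, description: str) -> None:
        self._tools[name] = description
        self._notify_changed()

    def remove(self, name: str) -> None:
        # Only notify if the tool was actually present.
        if self._tools.pop(name, None) is not None:
            self._notify_changed()

    def names(self) -> List[str]:
        return sorted(self._tools)

    def log(self, level: str, data: str) -> None:
        """Stream an intermediate status line to the client."""
        self._send({
            "jsonrpc": "2.0",
            "method": "notifications/message",
            "params": {"level": level, "data": data},
        })

    def _notify_changed(self) -> None:
        # No payload: the client is expected to re-run tools/list.
        self._send({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})


if __name__ == "__main__":
    outbox: List[Notification] = []
    tools = DynamicToolSet(send=outbox.append)
    tools.add("draft_report", "Write a first draft")
    tools.log("info", "draft_report: 50% complete")
    tools.remove("draft_report")
    for message in outbox:
        print(message["method"])
```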

MCP Implementation: A Practical Guide

Let's explore how to implement MCP in practice. We'll build a simple MCP server that demonstrates the core concepts while following common best practices.

1. Basic MCP Server Implementation

Here's a clean, well-structured MCP server implementation:

    import asyncio
    import logging
    from dataclasses import dataclass
    from typing import Any, Dict, List

    # Import paths follow the low-level Server API of the official Python SDK (pip install mcp)
    from mcp.server import Server
    from mcp.server.stdio import stdio_server
    from mcp.types import TextContent, Tool

    # Configure logging (basicConfig writes to stderr, so stdout stays free for the stdio transport)
    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger(__name__)


    @dataclass
    class MCPConfig:
        """Configuration for MCP server"""

        server_name: str
        version: str
        description: str
        tools: List[Tool]


    class MCPServer:
        """MCP server implementation"""

        def __init__(self, config: MCPConfig):
            self.config = config
            self.server = Server(config.server_name)
            self._register_tools()

        def _register_tools(self) -> None:
            """Register all available tools with the server"""

            @self.server.list_tools()
            async def list_tools() -> List[Tool]:
                # Answers the client's tool-discovery request
                return self.config.tools

            @self.server.call_tool()
            async def call_tool(name: str, arguments: Dict[str, Any]) -> List[TextContent]:
                # Placeholder dispatch: a real server would route to per-tool logic here
                known = {tool.name for tool in self.config.tools}
                if name not in known:
                    raise ValueError(f"Unknown tool: {name}")
                return [TextContent(type="text", text=f"{name} called with {arguments}")]

            for tool in self.config.tools:
                logger.info(f"Registered tool: {tool.name}")

        async def start(self) -> None:
            """Start the MCP server over stdio (blocks until the client disconnects)"""
            try:
                logger.info(f"MCP Server {self.config.server_name} v{self.config.version} starting")
                async with stdio_server() as (read_stream, write_stream):
                    await self.server.run(
                        read_stream,
                        write_stream,
                        self.server.create_initialization_options(),
                    )
            except Exception as e:
                logger.error(f"Failed to start MCP server: {e}")
                raise


    # Example tool definitions
    def create_tools() -> List[Tool]:
        """Create and return a list of MCP tools"""
        return [
            Tool(
                name="analyze_text",
                description="Analyze text content and extract key information",
                inputSchema={
                    "type": "object",
                    "properties": {
                        "text": {"type": "string", "description": "Text to analyze"},
                        "analysis_type": {
                            "type": "string",
                            "enum": ["sentiment", "keywords", "summary"],
                            "description": "Type of analysis to perform",
                        },
                    },
                    "required": ["text", "analysis_type"],
                },
            ),
            Tool(
                name="process_data",
                description="Process and transform data according to specified rules",
                inputSchema={
                    "type": "object",
                    "properties": {
                        "data": {"type": "array", "description": "Data to process"},
                        "operation": {
                            "type": "string",
                            "enum": ["filter", "sort", "transform"],
                            "description": "Operation to perform",
                        },
                        "rules": {"type": "object", "description": "Processing rules"},
                    },
                    "required": ["data", "operation"],
                },
            ),
        ]


    async def main():
        """Main entry point for the MCP server"""
        config = MCPConfig(
            server_name="example-mcp-server",
            version="1.0.0",
            description="Example MCP server demonstrating best practices",
            tools=create_tools(),
        )
        server = MCPServer(config)
        await server.start()


    if __name__ == "__main__":
        asyncio.run(main())

2. Tool Implementation Best Practices

When implementing MCP tools, follow these key principles:

- Clear Interface Definition:

  - Use JSON Schema for input validation

  - Document all parameters and return types

  - Include examples in documentation

- Error Handling:

  - Implement proper error boundaries

  - Return structured error responses

  - Log errors with context

- Performance Considerations:

  - Use async/await for I/O operations

  - Implement timeouts for long-running operations

  - Cache results when appropriate

Here's an example of a well-implemented MCP tool:

    import asyncio
    from datetime import datetime, timezone
    from typing import Any, Dict, Tuple

    from mcp.types import Tool


    class TextAnalysisTool:
        """Example of an MCP tool"""

        def __init__(self):
            self.timeout = 30  # seconds
            # Keyed by (text, analysis_type) so different inputs can never collide
            self.cache: Dict[Tuple[str, str], Dict[str, Any]] = {}

        async def analyze_text(self, text: str, analysis_type: str) -> Dict[str, Any]:
            """Analyze text with proper error handling and performance considerations"""
            try:
                # Check cache first
                cache_key = (text, analysis_type)
                if cache_key in self.cache:
                    return self.cache[cache_key]

                # Perform analysis with timeout
                # (asyncio.timeout needs Python 3.11+; use asyncio.wait_for on older versions)
                async with asyncio.timeout(self.timeout):
                    result = await self._perform_analysis(text, analysis_type)

                # Cache the result (only successful results reach this point)
                self.cache[cache_key] = result
                return result

            except asyncio.TimeoutError:
                return {
                    "error": "Analysis timed out",
                    "status": "error",
                    "code": "TIMEOUT",
                }
            except Exception as e:
                return {
                    "error": str(e),
                    "status": "error",
                    "code": "INTERNAL_ERROR",
                }

        async def _perform_analysis(self, text: str, analysis_type: str) -> Dict[str, Any]:
            """Internal method for performing text analysis"""
            # Implementation would go here
            return {
                "status": "success",
                "result": {
                    "analysis_type": analysis_type,
                    "text_length": len(text),
                    "processed_at": datetime.now(timezone.utc).isoformat(),
                },
            }


    # Tool registration
    text_analysis_tool = Tool(
        name="analyze_text",
        description="Analyze text content with proper error handling and caching",
        inputSchema={
            "type": "object",
            "properties": {
                "text": {"type": "string", "description": "Text to analyze"},
                "analysis_type": {
                    "type": "string",
                    "enum": ["sentiment", "keywords", "summary"],
                    "description": "Type of analysis to perform",
                },
            },
            "required": ["text", "analysis_type"],
        },
    )

MCP Specification: Current State and Future

The MCP ecosystem is rapidly evolving, with several core functionalities already stabilized, while exciting innovations are actively being drafted and discussed.

Stable Today:

- Streamable HTTP: Enables continuous streaming responses, crucial for real-time observability and responsiveness in enterprise-grade applications.

- Updated Auth: Robust enterprise-grade authorization and identity-provider integration to ensure secure, compliant interactions.

In Progress:

- Community Registry: A structured solution for discovering and installing MCP tools and servers. This registry supports both public and private repositories, facilitating easy integration and adoption.

- Elicitation: User-interactive primitive discussed above, currently drafted for explicit user feedback and context clarification.

Future Directions:

- Dynamic MCP Server Generation: Models may soon generate their own MCP servers dynamically, autonomously adapting their own tooling environments on the fly. This would represent a significant leap toward truly adaptive AI infrastructures.

This evolving specification landscape highlights the MCP community's active pursuit of standardization, innovation, and scalability.

Building MCP Servers — The Startup Opportunity

We are in the earliest stages of MCP adoption. So far, use cases have been limited primarily to developer tooling. However, the real opportunity lies beyond developer-centric applications, in leveraging MCP to solve domain-specific, real-world problems.

"We are very, very early—so build servers."

Consider the vast potential in sectors like:

- Education: Intelligent tutoring systems, adaptive learning agents

- Robotics: MCP-driven autonomous robots with dynamic tool orchestration

- Finance & Legal: Secure, observable agent workflows for compliance, auditing, and complex decision-making tasks

- Healthcare: AI agents that can securely interface with medical records and diagnostic tools

- Content Creation: Dynamic content generation with real-time fact-checking and source verification

Crucially, when designing MCP servers, remember there are three primary users:

- End users: Who directly benefit from intelligent workflows

- Client developers: Integrating MCP into broader systems

- The Models themselves: Which leverage tools and servers to execute complex reasoning tasks

Servers should thoughtfully expose tools and functionalities explicitly designed around anticipated model prompts and user scenarios.

The key insight for server developers is recognizing that the AI model itself becomes a "user" of the server interface, requiring careful consideration of how tools and resources are designed to facilitate effective AI interaction. This perspective shift from human-centric to AI-centric interface design opens new possibilities for creating highly optimized integration points that leverage the unique capabilities and limitations of current AI systems.

Key takeaway: The MCP ecosystem is a largely unexplored startup territory. Building intuitive, secure, and domain-specific MCP servers today positions startups at the forefront of the next major evolution in AI infrastructure.

Challenges & Design Considerations

While MCP opens exciting possibilities, building robust and performant MCP-driven systems involves careful consideration of several critical challenges and design aspects.

Tools Reflect Actions—Quality Over Quantity

It is tempting to assume that providing more tools inherently results in more capable agents. However, each tool represents an additional decision branch for an LLM, increasing complexity and potentially reducing overall performance due to increased ambiguity and cognitive overload for the model.

Simply put, more tools ≠ better agents.

Instead, MCP server developers must focus on carefully curating a focused set of powerful, composable, and contextually relevant tools.

Scope Tool Availability

To mitigate performance degradation from excessive tooling, MCP implementations should strictly manage tool availability based on specific contexts or tasks. A practical example is the approach adopted by VS Code, where tool selection is scoped explicitly to the user's context, preventing model overload and ensuring higher efficiency and accuracy of tool invocation.

A strategic design approach could look like this:

- Identify typical prompts and tasks

- Provide just enough tooling to complete these tasks efficiently

- Dynamically adjust available tools based on user role, context, or workflow stage
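
One way to read the three steps above is as a filter over a tool catalog. The sketch below is a minimal illustration under my own assumptions: the `ScopedTool` type, the example `CATALOG`, the role and stage names, and the `limit` cap are all hypothetical, and a real server would derive them from its own domain.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ScopedTool:
    """A tool plus the contexts in which it should be offered to the model."""

    name: str
    roles: FrozenSet[str]
    stages: FrozenSet[str]


# Hypothetical catalog for illustration.
CATALOG: List[ScopedTool] = [
    ScopedTool("search_docs", frozenset({"analyst", "admin"}), frozenset({"research", "review"})),
    ScopedTool("draft_summary", frozenset({"analyst"}), frozenset({"research"})),
    ScopedTool("approve_report", frozenset({"admin"}), frozenset({"review"})),
    ScopedTool("delete_workspace", frozenset({"admin"}), frozenset({"cleanup"})),
]


def tools_for(role: str, stage: str, catalog: List[ScopedTool] = CATALOG, limit: int = 5) -> List[str]:
    """Return only the tools relevant to this role and workflow stage, capped at `limit`."""
    matches = [t.name for t in catalog if role in t.roles and stage in t.stages]
    return matches[:limit]


if __name__ == "__main__":
    print(tools_for("analyst", "research"))
    print(tools_for("admin", "review"))
```

The cap is deliberately blunt: it keeps the list the model sees short even if the catalog grows, at the cost of needing a sensible ordering in the catalog itself.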

Logging, Observability, and Auditing

Another fundamental design consideration is the incorporation of robust logging, tracing, and observability mechanisms. Given the complexity of multi-agent interactions enabled by MCP, transparency becomes crucial. Logs must capture the full lifecycle of interactions clearly:

- Auditability: Ensuring compliance and transparency

- Observability: Real-time insights and debugging capabilities

- Traceability: Understanding decisions, agent interactions, and pinpointing failures swiftly

MCP developers must implement comprehensive tracing and logging from day one, as retroactively introducing observability often proves complex and inefficient.
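
As a starting point, every tool call can be wrapped so that it emits one structured log record carrying a trace ID, status, and duration. This is a minimal sketch using only the standard library; `traced_call`, the `mcp.trace` logger name, and the record fields are my own choices rather than anything defined by MCP, and logging raw arguments as done here may be inappropriate when they contain sensitive data.

```python
import json
import logging
import time
import uuid
from typing import Any, Callable, Dict, Optional

logger = logging.getLogger("mcp.trace")


def traced_call(
    tool_name: str,
    handler: Callable[..., Any],
    arguments: Dict[str, Any],
    trace_id: Optional[str] = None,
) -> Dict[str, Any]:
    """Run a tool handler and log one JSON record per call, success or failure."""
    trace_id = trace_id or uuid.uuid4().hex
    started = time.perf_counter()
    record: Dict[str, Any] = {"trace_id": trace_id, "tool": tool_name, "arguments": arguments}
    try:
        result = handler(**arguments)
        record["status"] = "ok"
        return {"trace_id": trace_id, "result": result}
    except Exception as exc:
        record["status"] = "error"
        record["error"] = str(exc)
        return {"trace_id": trace_id, "error": str(exc)}
    finally:
        # Runs on both paths, so every call leaves exactly one log line.
        record["duration_ms"] = round((time.perf_counter() - started) * 1000, 3)
        logger.info(json.dumps(record, default=str))


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(traced_call("add", lambda a, b: a + b, {"a": 1, "b": 2}))
    print(traced_call("divide", lambda a, b: a / b, {"a": 1, "b": 0}))
```

Passing the same `trace_id` through nested calls lets a single user request be followed across several tools or servers.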

Security and Authentication

As MCP servers become gateways to sensitive data and critical systems, security becomes paramount. Key considerations include:

- Secure authentication mechanisms for both users and AI agents

- Fine-grained permissions that limit tool access based on context

- Data isolation to prevent cross-contamination between different workflows

- Rate limiting and abuse prevention to protect against malicious usage
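
Two of these considerations, fine-grained permissions and rate limiting, can be combined in a small gate that runs before any tool handler. The following is a sketch under stated assumptions: `ToolGate`, the `grants` mapping, and the sliding-window limits are hypothetical names and policies of mine, and authentication of the caller identity is assumed to have happened earlier.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Set


class ToolGate:
    """Checks per-caller tool permissions and a sliding-window rate limit."""

    def __init__(
        self,
        grants: Dict[str, Set[str]],
        max_calls: int,
        window_s: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._grants = grants          # caller id -> tool names it may use
        self._max_calls = max_calls    # allowed calls per window
        self._window_s = window_s      # window length in seconds
        self._clock = clock            # injectable so tests can control time
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, caller: str, tool: str) -> str:
        """Return "allowed", "denied", or "rate_limited" for this call."""
        if tool not in self._grants.get(caller, set()):
            return "denied"
        now = self._clock()
        calls = self._history[caller]
        # Drop timestamps that have aged out of the window.
        while calls and now - calls[0] >= self._window_s:
            calls.popleft()
        if len(calls) >= self._max_calls:
            return "rate_limited"
        calls.append(now)
        return "allowed"


if __name__ == "__main__":
    gate = ToolGate({"agent-a": {"read_notes"}}, max_calls=2, window_s=60.0)
    for tool in ["read_notes", "delete_notes", "read_notes", "read_notes"]:
        print(tool, "->", gate.check("agent-a", tool))
```

Denied calls are checked first and do not consume rate-limit budget, so a misconfigured agent cannot lock itself out of the tools it is allowed to use.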

Real-World Applications and Case Studies

Personal Knowledge Management

One of the most compelling early applications of MCP is in personal knowledge management. Imagine an MCP server that can:

- Index and search through your personal documents, emails, and notes

- Provide contextual information retrieval during conversations

- Create connections between disparate pieces of information

- Maintain privacy while enabling powerful AI assistance

Enterprise Automation

Large organizations are beginning to explore MCP for:

- Automated compliance reporting that can access multiple data sources

- Intelligent customer service that routes queries to appropriate human experts

- Dynamic workflow orchestration that adapts based on real-time conditions

- Cross-system data integration without traditional API overhead

Research and Development

Academic and research institutions are leveraging MCP for:

- Literature review automation that can access and synthesize papers

- Experimental data analysis with real-time hypothesis generation

- Collaborative research platforms that enable AI-human partnerships

- Reproducible research workflows with full audit trails

Conclusion

MCP represents a transformative step in the evolution of AI-driven workflows, providing a structured, standardized protocol layer for intelligent interactions between models, tools, agents, and users. By enabling servers to act as dynamic clients, facilitating rich multi-agent orchestration, and ensuring robust observability and auditability, MCP directly addresses many core limitations currently faced by LLM systems.

The implications extend far beyond technical improvements. MCP is creating the infrastructure for a new generation of AI applications that can seamlessly integrate with existing systems, adapt to complex workflows, and scale across organizations and industries.

Now is the ideal moment to start building MCP-powered infrastructures. Whether you are a developer, entrepreneur, or researcher, the opportunities presented by MCP are vast and largely unexplored. The community is young, resources are rapidly emerging, and the potential impact across domains like education, robotics, finance, healthcare, and beyond is immense.

The future of AI agents is not just about smarter models; it's about creating the infrastructure that allows these models to interact meaningfully with the world around them. MCP provides that infrastructure.