When designing control systems for powered exoskeletons, one of the most critical challenges is creating intelligent assistance that enhances human capabilities without overriding human judgment. In this post, I'll explore our approach to developing a depth vision-based environment recognition system that provides intelligent recommendations while keeping the human pilot in ultimate control.

The Vision: Augmented Human Intelligence

The goal isn't to create a fully autonomous system, but rather an intelligent assistant that can:

- Perceive and analyze the surrounding environment in real-time

- Suggest optimal paths and navigation strategies

- Alert users to potential hazards or challenging terrain

- Adapt gait parameters based on detected terrain types

- Always defer to human judgment when conflicts arise

This human-centric approach ensures safety while reducing the cognitive load on the exoskeleton pilot.

System Architecture: Layered Intelligence

Our system employs a layered architecture with distinct responsibility zones:

Perception Layer

The foundation consists of:

- Depth Camera: Primary sensor for 3D environment mapping

- IMU Integration: Provides orientation and motion compensation

- Sensor Fusion: Combines multiple data streams for robust perception

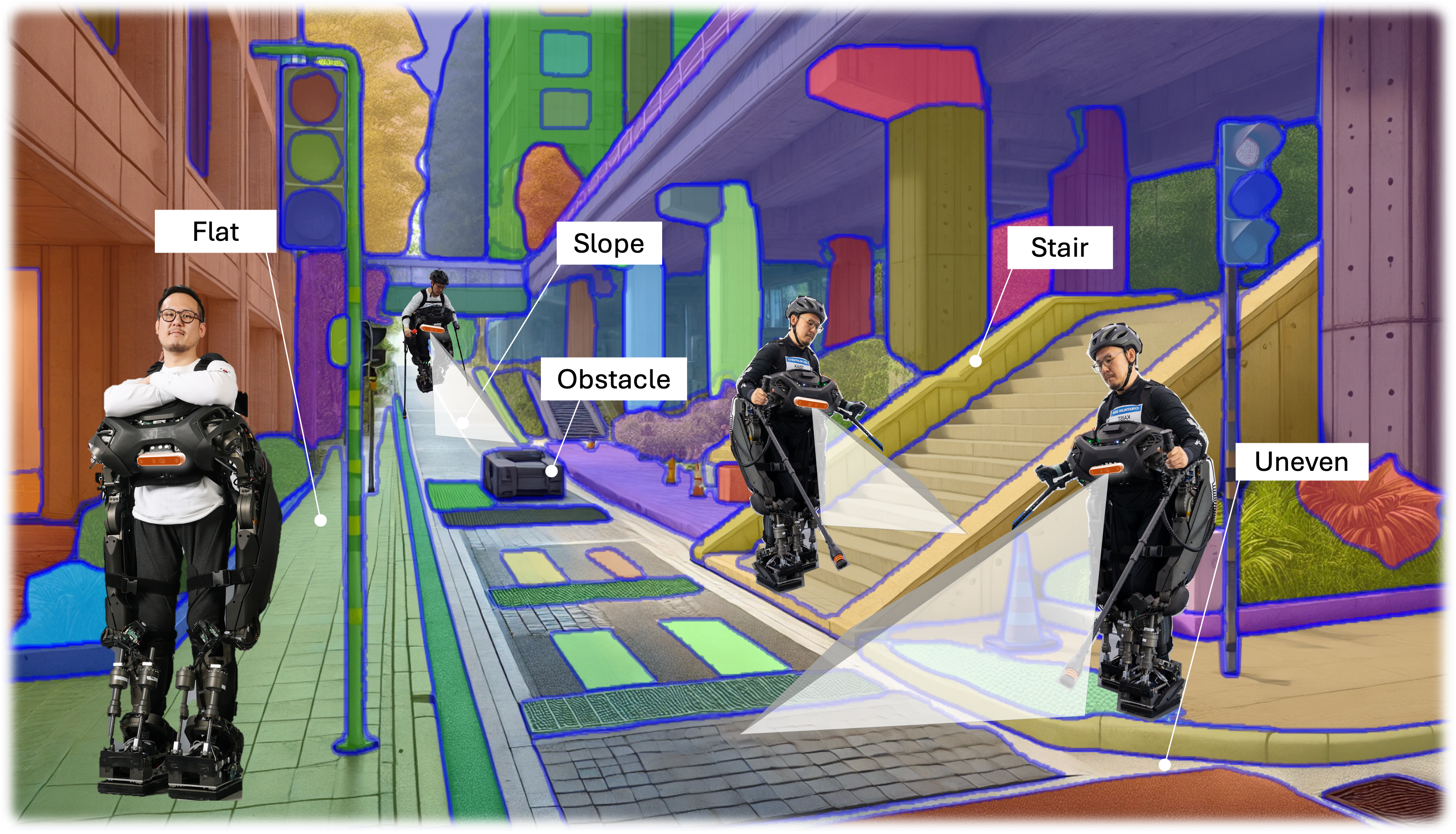

The perception layer classifies terrain into categories: flat surfaces, uneven ground, stairs, slopes, and obstacles. This real-time classification forms the basis for all higher-level decisions.
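To make the five categories concrete, here is a minimal rule-based sketch. It assumes the terrain ahead has already been reduced to a 1D height profile in the ground frame, sampled at a fixed spacing along the walking direction (at least two samples). The thresholds and the function name `classify_profile` are hypothetical, chosen for illustration rather than taken from our system.

```python
from enum import Enum
from typing import Sequence


class Terrain(Enum):
    FLAT = "flat"
    UNEVEN = "uneven"
    STAIRS = "stairs"
    SLOPE = "slope"
    OBSTACLE = "obstacle"


# Hypothetical thresholds in meters; real values would come from tuning.
STEP_MIN = 0.10      # a height jump at least this large counts as a stair edge
OBSTACLE_MIN = 0.25  # any single jump this large is treated as an obstacle
ROUGH_MAX = 0.02     # mean absolute residual tolerated on smooth ground
SLOPE_MIN = 0.05     # rise per meter above which a surface counts as a slope


def classify_profile(heights: Sequence[float], spacing: float) -> Terrain:
    """Classify a ground-frame height profile sampled every `spacing` meters."""
    if len(heights) < 2:
        raise ValueError("need at least two samples")
    jumps = [b - a for a, b in zip(heights, heights[1:])]
    if any(abs(j) >= OBSTACLE_MIN for j in jumps):
        return Terrain.OBSTACLE
    edges = [j for j in jumps if abs(j) >= STEP_MIN]
    if len(edges) >= 2:
        return Terrain.STAIRS
    # Least-squares line fit separates a steady incline from bumpiness.
    n = len(heights)
    xs = [i * spacing for i in range(n)]
    mx, my = sum(xs) / n, sum(heights) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (h - my) for x, h in zip(xs, heights)) / sxx
    roughness = sum(abs(h - (my + slope * (x - mx))) for x, h in zip(xs, heights)) / n
    if roughness > ROUGH_MAX or edges:
        return Terrain.UNEVEN
    if abs(slope) >= SLOPE_MIN:
        return Terrain.SLOPE
    return Terrain.FLAT
```

In practice, a learned classifier on the depth image or point cloud would likely replace these hand-tuned rules. The sketch only shows which geometric cues separate the categories.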

Decision Layer

This intermediate layer processes perception data and generates recommendations:

- Maintain Normal Gait: For safe, predictable terrain

- Adjust Stride & Step Height: For stairs and uneven surfaces

- Suggest Detour: When obstacles are detected
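The decision layer can be read as a mapping from terrain class to one of the three recommendations. Below is a minimal sketch. Grouping slopes with stairs and uneven ground is our assumption, since the list above does not place slopes explicitly. The `reason` field is a hypothetical addition that supports transparent recommendations the pilot can understand.

```python
from dataclasses import dataclass
from enum import Enum


class Terrain(Enum):
    FLAT = "flat"
    UNEVEN = "uneven"
    STAIRS = "stairs"
    SLOPE = "slope"
    OBSTACLE = "obstacle"


class Action(Enum):
    MAINTAIN_NORMAL_GAIT = "maintain normal gait"
    ADJUST_STRIDE_AND_STEP_HEIGHT = "adjust stride & step height"
    SUGGEST_DETOUR = "suggest detour"


@dataclass(frozen=True)
class Recommendation:
    action: Action
    reason: str  # shown to the pilot so every suggestion is explainable


def recommend(terrain: Terrain) -> Recommendation:
    if terrain is Terrain.OBSTACLE:
        return Recommendation(Action.SUGGEST_DETOUR, "obstacle detected ahead")
    if terrain in (Terrain.STAIRS, Terrain.UNEVEN, Terrain.SLOPE):
        # Assumption: slopes are handled like stairs and uneven ground.
        return Recommendation(Action.ADJUST_STRIDE_AND_STEP_HEIGHT,
                              f"{terrain.value} terrain ahead")
    return Recommendation(Action.MAINTAIN_NORMAL_GAIT, "flat, predictable terrain")
```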

User Control Layer

The critical human interface where the pilot maintains ultimate authority:

- User Approves: System executes recommended actions

- User Rejects: System returns to manual control

- User Input: Manual overrides are always respected
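The three rules of the user control layer can be written as a small arbitration function that runs once per control cycle. This is a sketch under stated assumptions. The names `Response` and `arbitrate` are hypothetical. A `None` command means "keep executing the pilot's current manual command". We also assume an unanswered recommendation is never executed automatically.

```python
from enum import Enum
from typing import Optional, Tuple


class Response(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    NONE = "none"  # the pilot has not answered yet


def arbitrate(recommended: str, response: Response,
              manual: Optional[str]) -> Tuple[str, Optional[str]]:
    """Return (mode, command) for the next control cycle."""
    if manual is not None:
        # Manual input always wins, even over an approved recommendation.
        return ("manual", manual)
    if response is Response.APPROVE:
        return ("assisted", recommended)
    # Rejected or unanswered: stay in manual control and issue nothing new.
    return ("manual", None)
```

Checking manual input first encodes the rule that overrides take precedence regardless of what the assistant has proposed.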

Technical Implementation: Depth + IMU Fusion

Technical Pipeline

Vision Algorithm Pipeline

The core vision processing runs in four stages:

- Depth Data Pre-processing: Raw depth data is filtered and cleaned to remove noise

- Coordinate Transformation: Converting camera coordinates to ground-relative reference frames

- Terrain Analysis: Classifying surface types and detecting navigation hazards

- Next Footstep Estimation: Predicting optimal foot placement locations
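The pre-processing stage can be sketched as two steps. First, mask readings outside the sensor's valid range. Then apply a 3x3 median filter over the remaining valid neighbors to suppress isolated spikes. The range limits and the name `preprocess` are hypothetical. A real implementation would operate on arrays (for example with OpenCV or NumPy) rather than nested lists.

```python
import statistics
from typing import List, Optional

Depth = List[List[Optional[float]]]  # meters; None marks an invalid pixel

# Hypothetical valid sensing range, in meters.
MIN_RANGE, MAX_RANGE = 0.2, 4.0


def preprocess(raw: List[List[float]]) -> Depth:
    """Mask out-of-range readings, then median-filter each valid pixel's 3x3 neighborhood."""
    h, w = len(raw), len(raw[0])
    masked = [[d if MIN_RANGE <= d <= MAX_RANGE else None for d in row] for row in raw]
    out: Depth = [[None] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if masked[r][c] is None:
                continue  # do not invent depth where the sensor returned nothing
            window = [
                masked[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
                if masked[rr][cc] is not None
            ]
            out[r][c] = statistics.median(window)
    return out
```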

Complete Algorithm Architecture

Complete Depth Vision Algorithm

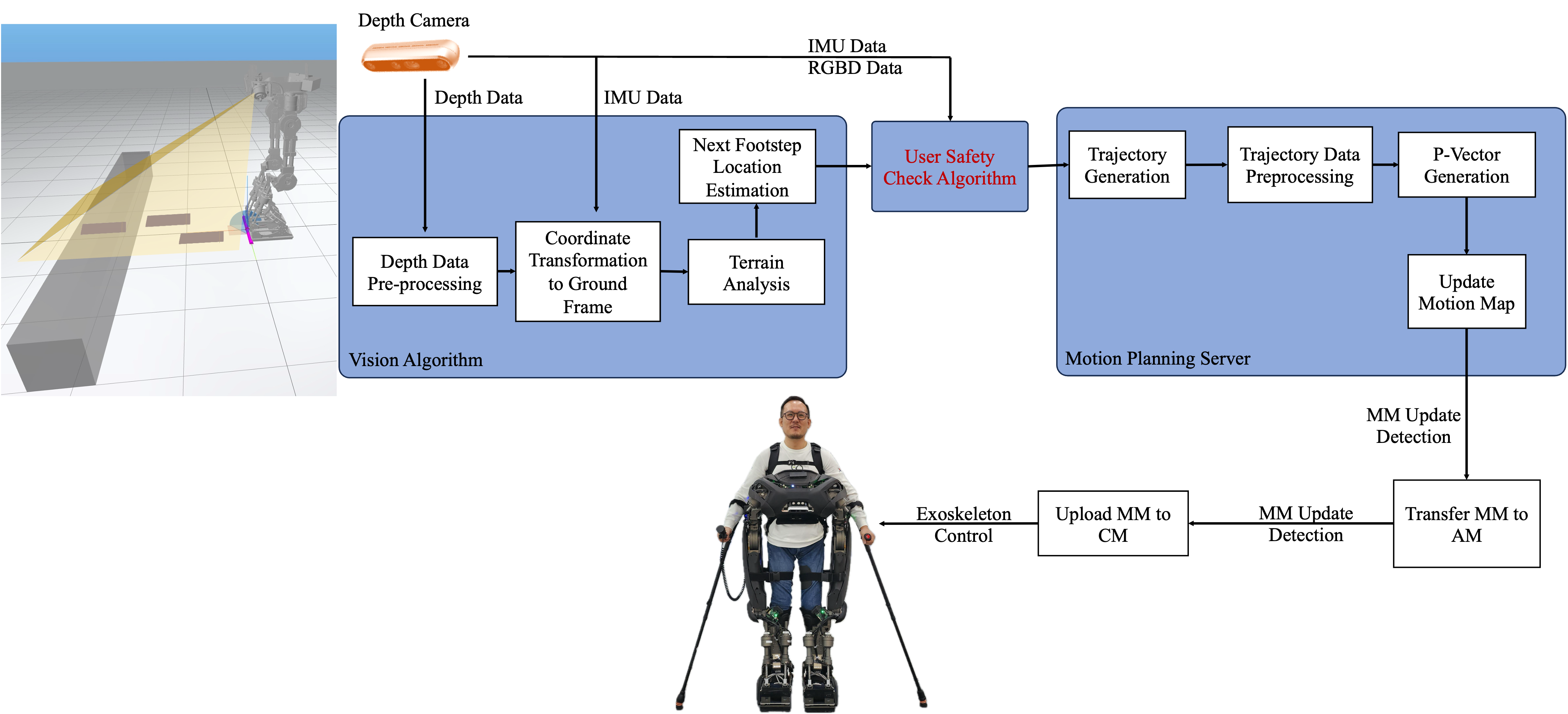

The figure above shows the complete implementation and how vision processing integrates with exoskeleton control. On the left, a depth camera captures 3D environmental data that feeds into the Vision Algorithm block, which processes the raw depth information in four stages:

Vision Processing Pipeline:

- Depth Data Pre-processing: Filters noise and validates sensor readings

- Coordinate Transformation to Ground Frame: Converts camera-relative coordinates to a gravity-aligned ground frame using IMU orientation data

- Terrain Analysis: Classifies surfaces and identifies navigation challenges

- Next Footstep Location Estimation: Predicts optimal foot placement for upcoming steps
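The coordinate transformation stage can be sketched in two steps. First, back-project a pixel with a pinhole camera model. Then rotate the point by the IMU's roll and pitch (yaw ignored) and shift the origin down by the camera's mounting height, so that floor points come out at height zero. The intrinsics `fx, fy, cx, cy`, the forward-left-up axis convention, and both function names are assumptions for illustration.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (forward, left, up), meters


def pixel_to_camera(u: float, v: float, depth: float,
                    fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection, returned in a forward-left-up camera frame."""
    x_opt = (u - cx) * depth / fx  # optical frame: x points right
    y_opt = (v - cy) * depth / fy  # optical frame: y points down
    return (depth, -x_opt, -y_opt)  # optical z (forward) -> forward, left, up


def camera_to_ground(p: Vec3, pitch: float, roll: float, cam_height: float) -> Vec3:
    """Apply roll, then pitch (radians, from the IMU), then lift the origin to ground level."""
    f, l, u = p
    # Roll about the forward axis (positive = right side down).
    l, u = l * math.cos(roll) - u * math.sin(roll), l * math.sin(roll) + u * math.cos(roll)
    # Pitch about the left axis (positive = camera tilted down).
    f, u = f * math.cos(pitch) + u * math.sin(pitch), -f * math.sin(pitch) + u * math.cos(pitch)
    return (f, l, u + cam_height)
```

For example, a camera mounted 1 m above the floor and pitched down 45° sees the image center at a depth of √2 m. That point maps to 1 m ahead at height 0, which is where the floor should be.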

The processed vision data then flows to the User Safety Check Algorithm (highlighted in red), which serves as a critical safety gate. This component validates that all system recommendations fall within safe operational parameters and biomechanical constraints.

From there, the Motion Planning Server takes over, generating trajectories, preprocessing movement data, and creating P-vectors for actuator control. The system continuously updates its motion map based on new environmental information, creating a closed-loop control system that adapts to changing terrain conditions.

The human pilot remains at the center of this process, with the ability to approve, reject, or override any automated recommendation. This architecture ensures that AI assistance enhances rather than replaces human decision-making.

Motion Planning Integration

The vision system feeds directly into our motion planning server, which handles:

- Trajectory Generation: Creating safe walking paths based on terrain analysis

- Trajectory Preprocessing: Optimizing paths for stability and efficiency

- P-Vector Generation: Producing control signals for the exoskeleton actuators

- Motion Map Updates: Continuously updating the environmental model
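As an illustration of trajectory generation, the sketch below samples a swing-foot path between two footholds in the sagittal plane. It uses cosine easing for forward motion, a linear blend between start and end heights (for stairs), and a half-sine clearance bump. This is a generic textbook-style swing profile assumed for illustration, not our planner. It does not produce P-vectors, and it performs no collision checking against stair edges.

```python
import math
from typing import List, Tuple


def swing_trajectory(start: Tuple[float, float], end: Tuple[float, float],
                     clearance: float, n: int = 11) -> List[Tuple[float, float]]:
    """Sample n (forward, height) points of a foot swing from start to end, in meters.

    Forward motion follows a cosine ease-in/ease-out; height blends from the start
    height to the end height and adds a half-sine bump of `clearance` meters.
    """
    (x0, z0), (x1, z1) = start, end
    points = []
    for i in range(n):
        s = i / (n - 1)                          # normalized swing phase in [0, 1]
        blend = (1 - math.cos(math.pi * s)) / 2  # zero velocity at lift-off and touch-down
        x = x0 + (x1 - x0) * blend
        z = z0 + (z1 - z0) * blend + clearance * math.sin(math.pi * s)
        points.append((x, z))
    return points
```

Stepping up a 15 cm stair with a 40 cm stride and 10 cm clearance, `swing_trajectory((0.0, 0.0), (0.4, 0.15), 0.1)` peaks at 17.5 cm mid-swing.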

Safety Architecture

A dedicated User Safety Check Algorithm sits between perception and execution, ensuring:

- No recommendations exceed safe operating parameters

- All suggestions are validated against biomechanical constraints

- Emergency stops are triggered when necessary
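A minimal sketch of such a gate checks a proposed step against per-user limits and short-circuits on an emergency stop. The fields, default limit values, and names (`StepPlan`, `Limits`, `safety_check`) are all hypothetical. Real limits would be set per user and validated clinically.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StepPlan:
    stride: float           # forward foot displacement, meters
    step_height: float      # rise (+) or drop (-) of the landing point, meters
    hip_flexion_deg: float  # peak hip flexion the trajectory requires


@dataclass(frozen=True)
class Limits:
    # Hypothetical defaults for illustration only.
    max_stride: float = 0.6
    max_rise: float = 0.20
    max_drop: float = 0.20
    max_hip_flexion_deg: float = 100.0


def safety_check(plan: StepPlan, limits: Limits, estop_pressed: bool = False) -> List[str]:
    """Return the violated constraints; an empty list means the plan may be offered."""
    if estop_pressed:
        return ["emergency stop"]  # nothing else is evaluated once a stop is requested
    violations = []
    if not 0.0 <= plan.stride <= limits.max_stride:
        violations.append("stride")
    if not -limits.max_drop <= plan.step_height <= limits.max_rise:
        violations.append("step height")
    if plan.hip_flexion_deg > limits.max_hip_flexion_deg:
        violations.append("hip flexion")
    return violations
```

Returning every violation rather than a bare boolean lets the interface tell the pilot why a recommendation was withheld.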

Real-World Challenges and Solutions

Implementing depth vision for exoskeleton control presents unique challenges that don't exist in traditional robotics applications.

Challenge 1: Computational Constraints

Problem: Real-time vision processing is computationally expensive, but exoskeletons have strict power and weight budgets.

Solution: We use an NVIDIA Jetson Orin for edge computing and optimize our algorithms for real-time performance while minimizing power consumption. The key is selective processing: we analyze only the relevant regions of the depth image and exploit temporal consistency between frames to reduce computational load.
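One way to combine region-of-interest cropping with temporal consistency is to wrap the expensive analysis so it runs only on a fixed crop, and only when that crop has changed noticeably. This is a minimal sketch. The class name, the mean-absolute-difference criterion, and the 2 cm threshold are assumptions, not a description of our Jetson pipeline.

```python
from typing import Any, Callable, List, Optional

Frame = List[List[float]]  # depth image, meters


class RoiAnalyzer:
    """Run an expensive analysis on a region of interest, only when the ROI changes."""

    def __init__(self, analyze: Callable[[Frame], Any], rows: slice, cols: slice,
                 change_threshold: float = 0.02):
        self.analyze = analyze
        self.rows, self.cols = rows, cols
        self.change_threshold = change_threshold  # mean absolute depth change, meters
        self._ref_roi: Optional[Frame] = None     # ROI that produced the cached result
        self._cached: Any = None
        self.calls = 0                            # how often the analysis actually ran

    def __call__(self, frame: Frame) -> Any:
        roi = [row[self.cols] for row in frame[self.rows]]
        if self._ref_roi is not None and self._mean_abs_diff(roi) < self.change_threshold:
            return self._cached  # scene is effectively unchanged: reuse the last result
        self._ref_roi, self._cached = roi, self.analyze(roi)
        self.calls += 1
        return self._cached

    def _mean_abs_diff(self, roi: Frame) -> float:
        diffs = [abs(a - b) for r1, r2 in zip(roi, self._ref_roi) for a, b in zip(r1, r2)]
        return sum(diffs) / len(diffs)
```

The current crop is compared with the crop that produced the cached result, not with the previous frame. Slow drift therefore accumulates until it triggers a fresh analysis instead of being ignored frame by frame.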

Challenge 2: Environmental Robustness

Problem: Depth cameras struggle in bright sunlight, with reflective surfaces, and during fast movements.

Solution: Multi-modal sensor fusion combines depth data with IMU readings to maintain accuracy. When depth data is temporarily unavailable, the system relies on previous observations and inertial measurements to maintain situational awareness.
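A simple form of this fallback keeps the last valid terrain points in the ground frame. When depth drops out, it shifts those points backward by the distance walked since the last update. This sketch assumes the forward displacement comes from inertial (and possibly joint-encoder) odometry. The `TerrainMemory` class is hypothetical, and drift handling is omitted.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (forward distance ahead of the user, height), meters


class TerrainMemory:
    """Hold the latest terrain points and carry them forward when depth is unavailable."""

    def __init__(self) -> None:
        self.points: List[Point] = []

    def update(self, fresh: Optional[List[Point]], forward_motion: float) -> List[Point]:
        """`fresh` is None when depth dropped out; `forward_motion` is meters walked since last call."""
        if fresh is not None:
            self.points = list(fresh)  # new observations are already in the current frame
        else:
            # Re-express remembered points relative to the user's new position,
            # dropping anything that is now behind the user.
            self.points = [(x - forward_motion, z) for x, z in self.points
                           if x - forward_motion >= 0.0]
        return self.points
```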

Challenge 3: Occlusion Management

Problem: The user's hands, crutches, or other objects can block the camera's view.

Solution: Strategic camera placement and temporal mapping. Short-term occlusions (1-2 seconds) are handled by using recent terrain maps, while the system alerts the user to longer obstructions.
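The occlusion policy reduces to a timer. While the view is clear, use live data. During a short occlusion, fall back to the cached map. Once the occlusion outlasts a limit, alert the pilot. In the sketch below, the 2-second limit is taken from the upper end of the range above. The class name and status strings are hypothetical.

```python
from typing import Optional


class OcclusionMonitor:
    """Decide whether to use live depth, the cached terrain map, or alert the pilot."""

    def __init__(self, max_occlusion_s: float = 2.0):
        self.max_occlusion_s = max_occlusion_s
        self.last_clear: Optional[float] = None  # timestamp of the last unobstructed view

    def status(self, view_clear: bool, now: float) -> str:
        if view_clear:
            self.last_clear = now
            return "live"
        if self.last_clear is not None and now - self.last_clear <= self.max_occlusion_s:
            return "cached"  # short occlusion: rely on the recent terrain map
        return "alert"       # long occlusion (or no map yet): notify the pilot
```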

Challenge 4: Motion Blur and Dynamic Environments

Problem: Fast movements create motion blur, and dynamic environments change rapidly.

Solution: Adaptive exposure control and prediction algorithms that anticipate terrain changes based on movement patterns.

Human-Centered Design Philosophy

What sets our approach apart is the emphasis on human agency and decision-making.

The Pilot Remains in Control

Unlike fully autonomous systems, our design ensures:

- Transparent Recommendations: Users always understand why the system suggests certain actions

- Easy Override: Manual control is instantaneous and takes precedence

- Graceful Degradation: System failures don't compromise safety

Cognitive Load Reduction

The system reduces mental burden by:

- Proactive Alerts: Warning about upcoming challenges before they become critical

- Contextual Suggestions: Providing relevant options based on current terrain

- Learning Adaptation: Adjusting recommendations based on user preferences
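A lightweight form of learning adaptation tracks an exponential moving average of how often the pilot accepts each kind of suggestion and uses it to order the options presented. This is a sketch of one possible approach, not necessarily ours. The smoothing factor, prior, and class name are assumptions. We assume preferences only re-rank options and never suppress safety alerts.

```python
from typing import Dict, List


class PreferenceModel:
    """Exponential moving average of the pilot's acceptance rate per suggestion type."""

    def __init__(self, alpha: float = 0.2, prior: float = 0.5):
        self.alpha = alpha  # weight of the newest response
        self.prior = prior  # assumed acceptance rate before any feedback
        self.acceptance: Dict[str, float] = {}

    def record(self, action: str, accepted: bool) -> None:
        p = self.acceptance.get(action, self.prior)
        self.acceptance[action] = (1 - self.alpha) * p + self.alpha * (1.0 if accepted else 0.0)

    def rank(self, actions: List[str]) -> List[str]:
        """Order candidate suggestions, most likely to be accepted first."""
        return sorted(actions, key=lambda a: self.acceptance.get(a, self.prior), reverse=True)
```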

Performance and Validation

Our testing in controlled environments has shown:

- 95% accuracy in terrain classification across various surface types

- Under 100 ms latency from perception to recommendation

- Zero false positives in safety-critical scenarios during testing

- Significant reduction in pilot cognitive load during navigation tasks

This work was conducted at KAIST's ExoLab under the guidance of Prof. Kong.